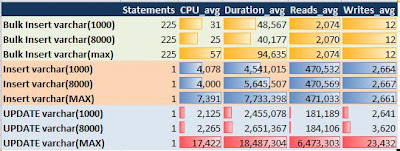

Essentially, I found that there is a performance cost to using VARCHAR(MAX) over VARCHAR(8000). This would suggest that you should only use VARCHAR(MAX) when you need to. (Ie you have data that can be longer than 8000 bytes.) I would add to this by saying that data types should always be as tight as practical. It would not be a good practice to make all varchar data types MAX. For example, if you application has a screen that only permits 30 characters, it would make sense to make the data type VARCHAR(30). Although, I could make exceptions if I thought this might be increased in future.

Notice; in the results of my test that INSERT and BULK INSERT are only penalised by cpu. UPDATE statements appear are penalised by reads and writes too. Consequently, inserts were only slower by a factor of 2, while updates were slower by a factor of 7. All tests were inserting or updating 110,879 rows taking 42MB. Compression was not used.

by reads and writes too. Consequently, inserts were only slower by a factor of 2, while updates were slower by a factor of 7. All tests were inserting or updating 110,879 rows taking 42MB. Compression was not used.

by reads and writes too. Consequently, inserts were only slower by a factor of 2, while updates were slower by a factor of 7. All tests were inserting or updating 110,879 rows taking 42MB. Compression was not used.

by reads and writes too. Consequently, inserts were only slower by a factor of 2, while updates were slower by a factor of 7. All tests were inserting or updating 110,879 rows taking 42MB. Compression was not used.

4 comments:

could be possible to post the script? I would like to test the code in 2005.

Sorry, I don't have the package any longer. It's really just a matter of having some data (a table with several million records). Then simply create an SSIS package to load it into a table, which you can drop at the end of the test.

Be sure to use performance options such as fast load that you would normally use.

Thanks! I was hesitant putting varchar(max) and gumming up the whole system just for one report requirement. I think if somebody's too wordy for 8000, they should be an author.

Hi, Very different content from other posts.Keep on posting such useful post!!!

http://sqlsolutions.com

Post a Comment