Tabular is simpler than Multidimensional, it does not support features such as write-back, complex calculations, named sets, many to many relationships and very large cubes. So why would you use Tabular? There might be several answers, but the one I am most interested in is Tabular models are held entirely in memory, providing exciting performance potential.

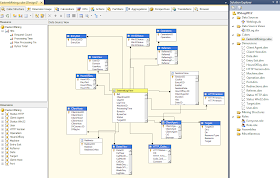

To test the memory aspects of Tabular versus Multidimensional, I created a large-ish cube in both. Since Tabular is entirely in-memory, I had to experiment with a cube that didn't require more memory than I had available in my x64 server. I had 8GB which translated to a fact table of about 450 Million rows from my WebLogs database. I created equivalent Tabular and Multidimensional cubes with the same fact measures and 11 dimensions.

To test the memory aspects of Tabular versus Multidimensional, I created a large-ish cube in both. Since Tabular is entirely in-memory, I had to experiment with a cube that didn't require more memory than I had available in my x64 server. I had 8GB which translated to a fact table of about 450 Million rows from my WebLogs database. I created equivalent Tabular and Multidimensional cubes with the same fact measures and 11 dimensions.During the design process, Tabular model imports and massages the data. I find this a bit annoying, but, it might be good feedback to a less experienced designer. In processing; both models completed in less than 60 minutes, with the Tabular model about 20% faster.

Now, when I ran queries on the two models, Tabular was able to answer all queries with negligible IO. The Multidimensional service was performing IO and its memory usage was fluctuating with the queries and managing its cache. Both models were able to satisfy my random queries quickly. However, it would be fair to say that the Tabular model returned random results faster, which, was largely due to it having all data in memory. It was also noticeably faster at distinct count (calculated) queries. The Multidimensional model tended to be just as fast when the submitted query required similar data to a recently executed query (answer in cache). It was a little slower for queries on data that hadn't been queried recently (answer not in cache). But it still returned results in less than 2 seconds for almost all random queries. Note, my server did not have a solid state drive, which would have assisted the Multidimensional model. Both models had only one partition, and I was interested to see that some Tabular queries were able to consume all 4 CPUs concurrently.

Now, when I ran queries on the two models, Tabular was able to answer all queries with negligible IO. The Multidimensional service was performing IO and its memory usage was fluctuating with the queries and managing its cache. Both models were able to satisfy my random queries quickly. However, it would be fair to say that the Tabular model returned random results faster, which, was largely due to it having all data in memory. It was also noticeably faster at distinct count (calculated) queries. The Multidimensional model tended to be just as fast when the submitted query required similar data to a recently executed query (answer in cache). It was a little slower for queries on data that hadn't been queried recently (answer not in cache). But it still returned results in less than 2 seconds for almost all random queries. Note, my server did not have a solid state drive, which would have assisted the Multidimensional model. Both models had only one partition, and I was interested to see that some Tabular queries were able to consume all 4 CPUs concurrently.The resulting databases, on disk, were not too dissimilar in size, Tabular=7.5GB, Multidimensional=5.4GB. You can see from the Task Manager, above, that at idle, the Tabular service was holding most of the 8GB of memory (peaking at 10.7GB during processing), leaving very little for any other service (SQL Server was squashed down to 350MB). The Multidimensional service was only holding 280MB and had a peak working set of 4.9GB (peak during processing). This is a bugbear of mine, that the Multidimensional service doesn't hold onto more memory, when it is available. Something else you should be aware of, Tabular service will hold onto memory even though there are no queries active and memory is short, which could degrade other services on the same server. I had to shut down Tabular when testing Mulitdimensional, so that it had a fair go at the RAM.

For all the effort Microsoft has put into creating the Tabular model, I am left questioning why they didn't enhance Multidimensional's memory management to use memory when available. The Multidimensional service will not hold a large cube in memory even though there is plenty of RAM to do so. The caching in multidimensional service has several components, but the aggregation cache doesn't appear to exceed 2GB. It also relies on the Windows file cache, which doesn't appear to offer much more caching ability. This is particularly noticeable with cubes larger than 4GB and smaller than the available memory. See my blog Why SSAS Doesn't Cache The Entire Cube? This is the most significant performance limitation I have come across with large Multidimensional cubes and the reason I strongly recommend solid state drives for very large cubes. It is also why adding RAM to a server stops being advantageous after a point. Enhancing Multidimensional to use more RAM, when available, would have diminished the need to have a second olap technology.

In summary, I would like to make the reader aware that when employing Tabular models, the model must stay within the memory constraints of the server. So, if your server has 100GB of RAM, and is dedicated to your Tabular model, your Tabular model can not be larger than 100GB. If you try to process larger models, they will fail with a memory error. In a practical sense, more RAM is required to accomodate updating in parallel with querying the processed version. Also be aware that Tabular models will consume memory, which could negatively impact other services. On the other hand, if you can ensure your Tabular models are within RAM limitations, query performance will be reliably fast and completely independent of IO stress. For my enterprise customers, since Multidimensional has no absolute size limit and is much more functional, it is likely to be their cube of preference for a while longer, with Tabular an option for specialty needs.

Additional note: I've come across another feature that I have found to be quite important in choosing between Tabular and Multidimensional. Tabular stores data by compressing columns, every record is stored. Whereas Multidimensional stores data by cells. The aggregation of data can offer huge compression, then the cells, themselves are compressed. This provides a significant pro and con to Tabular. The pro is that a calculation can filter records by measure values. Eg, % of transactions with Net Sales > $100. Multidimensional can do this too, but it would have to be baked into the design with a dimension on transaction value. The associated con for Tabular is that if you double the number of records in a Tabular model (with the same identical records) the model size is approximately double. With Multidimensional, you can double the number of records (with the same identical records) and the measure group size is largely unchanged, since it will have the same number of cells. So the size of Tabular versus Multidimensional models can vary quite a lot.

There are a few things that Multidimensional supports that Tabular doesn't. In my experience, the most salient difference is that Tabular does not support composite Primary Keys where Multidimensional does.

There is now an msdn article on this topic. http://msdn.microsoft.com/en-us/library/hh994774.aspx

ReplyDeleteThe article on MSDN points to a Word Doc. Here is a link to a Google Doc of the Word Doc if you do not have Office to view it: https://docs.google.com/file/d/0BwgLvVq0rcS7Y3J1XzhWVFhEa1k/edit?usp=sharing

ReplyDeleteThanks for this very interesting comparison Richard. I realise it's over a year now, but I was wondering if you can recall whether your Multidimensional model included Aggregations? I would expect those to increase the Process time but speed the Query time.

ReplyDeleteI do remember that I had some aggregations. About 100. I'm not so sure of the % level, but I think it was around 20%

ReplyDeleteDear Richard,

ReplyDeleteYou provided a very helpful information on your blog. I will really appreciate your effort.

Further, as i am new in this area therefore will you please differentiate between MOLAP and Tabular Model because i got confused in both of it. Thanks!

Richard, would you like to speak for SQL Saturday BI Edition in Baltimore in May of this year?

ReplyDeleteHi Salva, Yes, I am flattered and would love to. I live in Sydney, so could you help me with flights?

ReplyDelete